The Evolving Reality Beyond the Hype

Generative AI has been the darling of the tech world for several years now. From creating art to generating human-like text, these systems have captured the imagination of the public and professionals alike. But as we operationalize generative AI (Gen AI) and expand its use cases, a new narrative is emerging: some argue that Gen AI is getting “dumber.” Is this true? Or are we simply witnessing the natural evolution of a technology as the initial hype wears off and reality sets in?

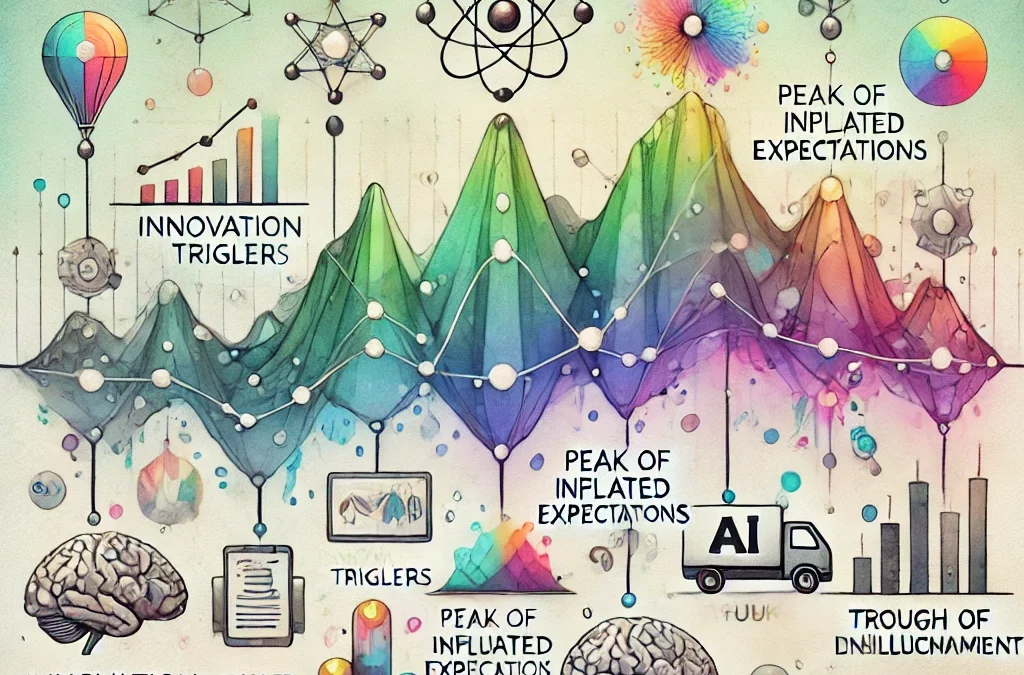

The Hype Cycle: From Peak to Trough

To understand this phenomenon, it helps to look at Gartner’s Hype Cycle, a graphical representation of the maturity, adoption, and social application of specific technologies. The cycle begins with the “Innovation Trigger,” followed by the “Peak of Inflated Expectations,” where early successes and media frenzy create unrealistic expectations. Gen AI has undoubtedly spent time at this peak.

As the technology becomes more widely adopted, it moves into the “Trough of Disillusionment.” This phase is characterized by a more critical evaluation of the technology’s limitations and challenges. It’s during this stage that people may start to perceive Gen AI as getting “dumber.” However, this perception is less about the technology deteriorating and more about users gaining a more nuanced understanding of its capabilities and limitations.

The Operationalization Challenge

When generative AI was first introduced, it dazzled us with its potential. But as companies and individuals attempt to operationalize it, the complexities and limitations become apparent. For example, producing coherent and contextually appropriate text isn’t always straightforward. Models like GPT-4 are incredibly sophisticated, but they are still prone to errors, biases, and outputs that sound plausible yet are factually incorrect or nonsensical (often called hallucinations).

The process of integrating Gen AI into real-world applications often reveals these shortcomings. For instance, customer service bots need to handle a wide range of queries accurately and consistently. Initial prototypes might work well in controlled environments, but in the chaotic reality of customer interactions, the flaws become more evident.

The Limitations of Large Language Models

One major criticism is that while Gen AI models are trained on vast amounts of data and contain enormous numbers of parameters, they lack true understanding. They are essentially sophisticated pattern-matching systems. This limitation becomes evident when the context or nuance of a conversation changes. A human can understand and adapt to these changes naturally, but a Gen AI model may struggle, leading to errors that can seem “dumb.”

Moreover, the issue of bias in AI outputs has been a persistent problem. These models learn from vast datasets that include the biases present in human language. Efforts to mitigate these biases are ongoing, but they are far from perfect. This contributes to the perception that Gen AI is less intelligent than initially believed when it produces biased or inappropriate content.

Realizing the Potential: Beyond the Hype

Despite these challenges, it’s essential to recognize that generative AI has not actually become less capable. Instead, the broader tech community and the public are becoming more sophisticated in their understanding of what Gen AI can and cannot do. This shift is critical for the technology’s long-term success.

Narrowing the Focus

One way to address the limitations is to narrow the focus of Gen AI applications. Specialized models trained or fine-tuned on domain-specific data can often outperform general-purpose models within that domain. For example, a Gen AI model specialized on medical data may provide more accurate and reliable outputs in a healthcare context than a general model like GPT-4, though this depends on the quality of the data and the task.

Human-AI Collaboration

Another promising avenue is the emphasis on human-AI collaboration. Instead of viewing Gen AI as a replacement for human intelligence, we should see it as a tool that can augment human capabilities. By leveraging the strengths of both humans and AI, we can achieve outcomes that neither could accomplish alone. For instance, in creative industries, AI can generate a multitude of ideas, but a human can curate and refine these ideas into something truly exceptional.
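The division of labor described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `generate_ideas` is a hypothetical stand-in for a generative model call (no real API is used), and `approve` stands in for a human reviewer's judgment.

```python
from typing import Callable, List


def generate_ideas(topic: str, n: int) -> List[str]:
    """Hypothetical stand-in for a generative model: returns n candidate ideas."""
    return [f"{topic} concept #{i}" for i in range(1, n + 1)]


def curate(ideas: List[str], approve: Callable[[str], bool]) -> List[str]:
    """Keep only the ideas that the human reviewer approves, in original order."""
    return [idea for idea in ideas if approve(idea)]


# The model produces many candidates; the human keeps the promising ones.
candidates = generate_ideas("poster", 5)
# Hypothetical reviewer decision: only concepts #2 and #5 are worth refining.
shortlist = curate(candidates, lambda idea: idea.endswith(("#2", "#5")))
```

The point of the sketch is the shape of the workflow: generation is cheap and broad, while the approval step stays with a person.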

Continuous Improvement and Feedback

Operationalizing Gen AI also involves a continuous cycle of feedback and improvement. Companies must invest in monitoring AI outputs, identifying errors, and updating models regularly. This iterative process helps AI systems evolve and improve over time, rather than stagnate.
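A monitoring loop of this kind might be sketched as follows. This is a minimal illustration, not a production design: the `FeedbackLog` class, its field names, and the `error_threshold` value are all assumptions introduced here, and the `is_error` flag is assumed to come from a human reviewer or an automated check.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Collects reviewed model outputs and tracks the observed error rate."""

    # Hypothetical threshold: share of flagged outputs that triggers a model review.
    error_threshold: float = 0.1
    records: list = field(default_factory=list)

    def record(self, prompt: str, output: str, is_error: bool) -> None:
        """Store one reviewed output together with the reviewer's verdict."""
        self.records.append({"prompt": prompt, "output": output, "is_error": is_error})

    def error_rate(self) -> float:
        """Fraction of recorded outputs flagged as errors (0.0 if nothing is recorded)."""
        if not self.records:
            return 0.0
        return sum(1 for r in self.records if r["is_error"]) / len(self.records)

    def flagged(self) -> list:
        """Return the flagged records, e.g. as input for the next model update."""
        return [r for r in self.records if r["is_error"]]

    def needs_update(self) -> bool:
        """True when the observed error rate exceeds the configured threshold."""
        return self.error_rate() > self.error_threshold
```

In use, each reviewed response would be passed to `record`, and a periodic job could call `needs_update` to decide whether the flagged examples justify revising prompts, retraining, or fine-tuning.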

The Road Ahead

As we move beyond the initial excitement and hype, it’s clear that generative AI is not getting dumber. Instead, we are entering a phase of more realistic and grounded expectations. This transition is crucial for the technology to mature and integrate meaningfully into various industries.

The future of Gen AI lies in our ability to recognize and address its limitations while continuing to push the boundaries of what is possible. By focusing on specific applications, fostering human-AI collaboration, and committing to continuous improvement, we can unlock the full potential of generative AI and ensure it remains a powerful and valuable tool in our technological arsenal.

While the perception of Gen AI’s capabilities may shift as we move through the hype cycle, the technology itself continues to advance. The key is to embrace this evolution, recognizing that maturity and sophistication come from understanding and addressing both the strengths and limitations of generative AI.